Understanding Large Language Models: The Future of AI 🌟

In the ever-changing landscape of artificial intelligence, large language models (LLMs) have emerged as one of the most exciting technologies. These systems can comprehend, generate, and even transform human language, and they are poised to revolutionize many industries. This blog examines LLMs from the inside, covering their architecture, how they work, what they are used for, and the issues surrounding them.

What Are Large Language Models? 🤖

Large Language Models are advanced artificial intelligence systems that read and write text in a human-like way. They are trained on massive amounts of data and use deep learning to predict and generate text. This versatility lets LLMs handle a range of tasks such as translation, summarization, and creative writing.

The Evolution of Language Models 📜

Language models started out with much simpler structures. The shift from Recurrent Neural Networks (RNNs) to the transformer architecture marked the start of a revolutionary stage in artificial intelligence. RNNs process text sequentially, one word at a time, which made it difficult for them to keep track of context over long passages. The introduction of the transformer model in 2017 changed this.

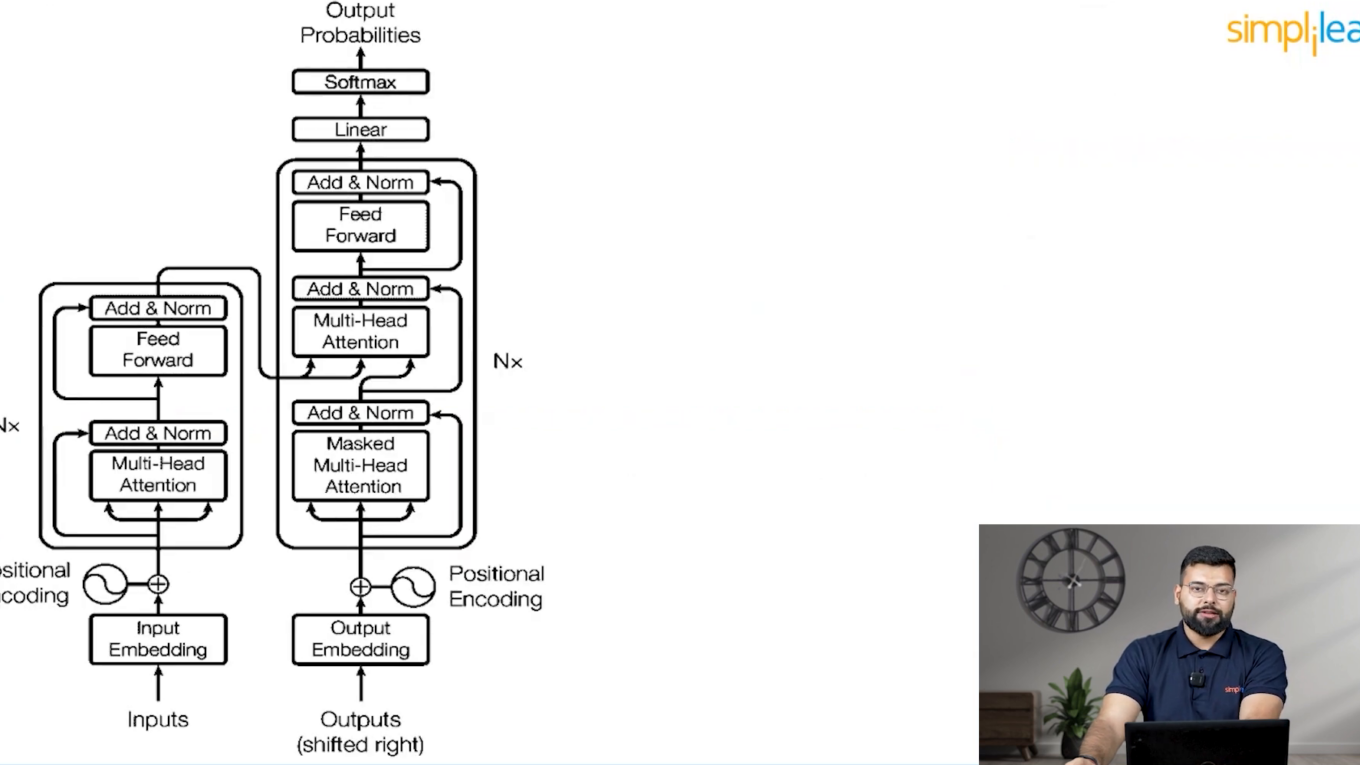

Transformers are built on attention mechanisms, which let the model look at an entire sentence or paragraph at once. This ability greatly improves the model's grasp of context and meaning, and it is one of the most important features of modern natural language processing.
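
To make "looking at an entire sentence at once" concrete, here is a minimal plain-Python sketch of scaled dot-product attention, the core operation defined in the original 2017 transformer paper. It is a simplification: real models learn separate projection matrices for queries, keys, and values, use many attention heads, and run on optimized tensor libraries. The function names and the toy vectors are our own.

```python
import math


def softmax(scores):
    """Convert a list of raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over a whole sequence.

    queries, keys, values: lists of vectors (lists of floats), one per token.
    Every query is compared with every key, so each output row mixes
    information from all positions in the input at once.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity between this token's query and every token's key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Three toy "tokens" with arbitrary 2-dimensional vectors.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = attention(x, x, x)  # self-attention: each token attends to all three
```

Because the attention weights for each token sum to 1, every output vector is a blend of the input value vectors. The blend is weighted toward the tokens most relevant to that position.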

How Transformers Work 🔍

A transformer prepares its input in three steps: tokenization, encoding, and embedding. First, the text is broken down into tokens (words or pieces of words), and each token is encoded as a numeric ID. These IDs are then converted into embeddings: multi-dimensional vectors that capture the meaning of each token.
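
The three steps can be sketched in a few lines of Python. This is an illustrative toy, not how a production tokenizer works. The vocabulary, the whitespace-based `tokenize` function, and the randomly initialized embedding table are all hypothetical stand-ins. Real models use learned subword vocabularies and trained embedding matrices instead.

```python
import random

# Hypothetical toy vocabulary; real LLMs use learned subword tokenizers
# (for example byte-pair encoding) with tens of thousands of entries.
VOCAB = {"<unk>": 0, "large": 1, "language": 2, "models": 3, "read": 4, "text": 5}
EMBED_DIM = 4  # real models use hundreds or thousands of dimensions


def tokenize(text):
    """Split text into lowercase word tokens (a simplification of subword tokenization)."""
    return text.lower().split()


def encode(tokens):
    """Map each token to its integer ID, falling back to <unk> for unknown words."""
    return [VOCAB.get(tok, VOCAB["<unk>"]) for tok in tokens]


# Embedding table: one vector per vocabulary entry. In a trained model these
# numbers are learned; here they are random placeholders.
rng = random.Random(0)
EMBEDDINGS = [[rng.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in VOCAB]


def embed(ids):
    """Look up the embedding vector for each token ID."""
    return [EMBEDDINGS[i] for i in ids]


tokens = tokenize("Large language models read text")
ids = encode(tokens)
vectors = embed(ids)
print(tokens)  # ['large', 'language', 'models', 'read', 'text']
print(ids)     # [1, 2, 3, 4, 5]
```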

The encoder takes these embeddings as input and produces context-aware representations that summarize the information in the whole input (often described as a context vector). The decoder relies on this learned context to generate new text, such as a translation or a summary.
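
A decoder typically generates its output one token at a time, feeding each new token back in before choosing the next. The sketch below illustrates that loop with greedy decoding, which always takes the highest-scoring token. It is a toy built on heavy assumptions. `next_token_scores` and its hard-coded score table are hypothetical stand-ins for a trained decoder. They also ignore the encoder's context, which a real model would attend to at every step.

```python
# Hypothetical stand-in for a trained decoder: given the tokens generated so
# far, return a score for each candidate next token. A real decoder computes
# these scores with attention over the encoder's output and previous tokens.
TOY_SCORES = {
    "<start>": {"hello": 2.0, "world": 0.5, "<end>": 0.1},
    "hello": {"hello": 0.1, "world": 1.5, "<end>": 0.3},
    "world": {"hello": 0.2, "world": 0.1, "<end>": 2.5},
}


def next_token_scores(generated):
    """Score candidate next tokens based only on the most recent token (toy model)."""
    return TOY_SCORES[generated[-1]]


def greedy_decode(max_len=10):
    """Generate tokens one at a time, always picking the highest-scoring one."""
    generated = ["<start>"]
    for _ in range(max_len):
        scores = next_token_scores(generated)
        best = max(scores, key=scores.get)
        if best == "<end>":
            break
        generated.append(best)
    return generated[1:]  # drop the start marker


print(greedy_decode())  # ['hello', 'world']
```

Greedy decoding is only one strategy. Many systems instead sample from the score distribution to get more varied text.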

Capabilities of Large Language Models 💡

LLMs offer a wide range of capabilities that apply across many areas:

- Text Generation: LLMs can produce coherent articles, stories, and poetry.

- Summarization: They can condense long documents into their main points, which is especially useful in business settings.

- Translation: LLMs can translate written text between languages, helping people communicate across language barriers.

- Classification: They can classify text by sentiment or subject matter and detect toxic language.

- Chatbots: LLMs provide an effective interface for virtual assistants that are able to communicate with users in a natural manner.

Real-World Applications of LLMs 🌍

LLMs are already being used across many industries. For example:

- Healthcare: LLMs help predict protein structures, detect diseases, and evaluate patient data, driving advances in medicine.

- Finance: They summarize quarterly earnings calls and process other financial documents and texts that inform decision-making.

- Retail: LLM-powered conversational bots enhance the customer shopping experience.

- Software Development: They help developers write code and fix bugs, making development more efficient.

- Marketing: LLMs help marketers craft compelling messages and analyze customer responses in a more structured way.

How Do Large Language Models Learn? 📚

We primarily train LLMs with unsupervised (more precisely, self-supervised) learning, which lets them learn from large datasets without labeled data. For example, a model learns to predict the next word from the words before it. By doing so, it picks up the patterns and relationships in the data that enable tasks such as text generation and summarization.
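
Next-token prediction is the objective used by GPT-style models; BERT-style encoders instead mask out words and predict them. The sketch below shows how raw text can supply its own labels, which is the sense in which training needs no labeled data. The function name `next_token_pairs` and the fixed context window are illustrative assumptions. Real pipelines operate on subword token IDs at a far larger scale.

```python
def next_token_pairs(tokens, context_size=3):
    """Turn an unlabeled token sequence into (context, target) training pairs.

    The "label" for each pair is simply the token that follows the context,
    so no human annotation is needed.
    """
    pairs = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs


text = "the cat sat on the mat"
for context, target in next_token_pairs(text.split()):
    print(context, "->", target)
```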

There are different types of learning techniques employed by LLMs:

- Zero-Shot Learning: LLMs can perform tasks they weren’t specifically trained for.

- One-Shot Learning: They can learn from a single example to improve their performance on a specific task.

- Few-Shot Learning: LLMs can learn from a few examples, enhancing their ability to generalize.
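
In practice, zero-, one-, and few-shot learning usually differ only in how many worked examples are placed in the prompt; the model's weights are not updated. Below is a minimal sketch, assuming a sentiment-classification task and a simple "Review:/Sentiment:" prompt format of our own choosing. The resulting string would be sent to whichever LLM you use, and that call is not shown.

```python
def build_prompt(instruction, examples, query):
    """Assemble a prompt with zero, one, or several worked examples."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")  # the model is expected to complete this line
    return "\n".join(lines)


EXAMPLES = [
    ("The battery lasts all day.", "positive"),
    ("The screen cracked within a week.", "negative"),
]
instruction = "Classify the sentiment of each review as positive or negative."
query = "Setup was quick and painless."

zero_shot = build_prompt(instruction, [], query)
one_shot = build_prompt(instruction, EXAMPLES[:1], query)
few_shot = build_prompt(instruction, EXAMPLES, query)
print(few_shot)
```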

Customization Techniques for LLMs ⚙️

We utilize several customization techniques to improve LLMs’ performance on specific tasks:

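- Prompt Tuning: Adjusting the prompts given to the model to guide its responses more effectively, either by hand-crafting the prompt text or by learning "soft prompt" embeddings while the model's own weights stay frozen.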

- Fine-Tuning: Further training the pretrained model on task-specific datasets to improve accuracy.

- Adapters: Adding smaller networks to the model, allowing it to adapt to specific tasks without retraining the entire model.
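
Adapters are often built as small "bottleneck" layers inserted inside each transformer block. The plain-Python sketch below illustrates that idea under our own assumptions. Names such as `Adapter` and `bottleneck_dim` are ours, and a real implementation would use a deep learning framework with trainable tensors. It shows why adapters are cheap. With a hidden size of 768 and a bottleneck of 16, the adapter has 2 × 768 × 16 = 24,576 weights, ignoring biases. A single 768 × 768 weight matrix in the base model has 589,824.

```python
import random


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def relu(vec):
    """Elementwise ReLU nonlinearity."""
    return [max(0.0, x) for x in vec]


class Adapter:
    """Bottleneck adapter: down-project, apply ReLU, up-project, add the input back.

    Only these two small matrices would be trained; the surrounding
    pretrained model's much larger weights stay frozen.
    """

    def __init__(self, hidden_dim, bottleneck_dim, seed=0):
        rng = random.Random(seed)
        self.down = [[rng.gauss(0, 0.02) for _ in range(hidden_dim)]
                     for _ in range(bottleneck_dim)]
        # Zero-initializing the up-projection makes the adapter start as an
        # identity function, so inserting it does not disturb the base model.
        self.up = [[0.0] * bottleneck_dim for _ in range(hidden_dim)]

    def __call__(self, hidden):
        h = relu(matvec(self.down, hidden))
        delta = matvec(self.up, h)
        return [x + d for x, d in zip(hidden, delta)]  # residual connection

    def num_params(self):
        return sum(len(r) for r in self.down) + sum(len(r) for r in self.up)


adapter = Adapter(hidden_dim=768, bottleneck_dim=16)
print(adapter.num_params())  # 24576
```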

Types of Large Language Models 🏷️

We categorize LLMs into three types based on their architecture:

- Encoder-Only Models: Excellent for understanding tasks like classification and sentiment analysis (e.g., BERT).

- Decoder-Only Models: Great at generating text (e.g., GPT-3).

- Encoder-Decoder Models: Ideal for tasks like translation and summarization (e.g., T5).
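
One concrete difference between these families is which tokens each position may attend to. Here is a minimal sketch in plain Python, assuming a boolean-mask representation of our own choosing; frameworks often use additive masks of 0 and negative infinity instead. Encoder-only models use a full mask, and decoder-only models use a causal mask. Encoder-decoder models use a full mask in the encoder and a causal mask in the decoder.

```python
def attention_mask(seq_len, causal):
    """Return a seq_len x seq_len mask where True means "may attend".

    Encoder-style (BERT-like) attention uses a full mask: every token sees
    every other token. Decoder-style (GPT-like) attention uses a causal mask:
    token i may only see tokens 0..i, so it cannot peek at words it must predict.
    """
    return [[(j <= i) if causal else True for j in range(seq_len)]
            for i in range(seq_len)]


for row in attention_mask(4, causal=True):
    print("".join("x" if allowed else "." for allowed in row))
```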

Advantages and Challenges of LLMs ⚖️

LLMs offer significant advantages, but they also face several obstacles.

Advantages:

- Extensibility: LLMs provide a foundation on which developers can build applications for a variety of needs.

- Flexibility: They can be used for different tasks and in different deployments.

- Performance: High-quality, low-latency responses increase productivity.

- Accuracy: Accuracy generally improves as the number of parameters and the size of the training dataset grow.

- Efficiency: LLMs streamline repetitive procedures, saving time and resources.

Challenges:

- Development Cost: Building LLMs requires considerable investment in hardware and large datasets.

- Operational Cost: The ongoing cost of running and maintaining large language models remains high.

- Bias: Models risk absorbing and reproducing biases present in their training data.

- Ethical Concerns: LLMs raise risks of privacy violations and can be misused to generate harmful content.

- Explainability: It is often difficult to explain why a model produced a particular output.

- Hallucination: Content generated by the model may contain false or nonsensical elements.

The Future of Large Language Models 🔮

The outlook for LLMs is bright, given continual improvements in their output quality, efficiency, and range of applications. Businesses are increasingly using LLMs across a variety of work activities, which boosts productivity and drives further innovation.

To sum up, large language models are changing the face of the AI industry with their ability to comprehend and create human-like written content. They come with real challenges, but the opportunity for development is huge. Organizations and individuals will need to grow and adapt to make the most of these tools.

More articles on current trends in artificial intelligence will appear in our future publications, so don't forget to like, share, and subscribe! If you have any questions, feel free to ask in the comments section below.